Code generation. Reimagined.

BirdMind is BrinPage’s first domain-specific model, built to generate clean, production-ready code that integrates seamlessly into modern applications. It is not a general assistant but a focused engine, designed for companies that need precision, speed, and reliability at scale.

Trained for products. Not playgrounds.

Most code models generate fragments. BirdMind is trained for complete systems — the frameworks, libraries, and infrastructure that modern companies rely on every day. From secure authentication to data pipelines, from orchestration to UI — it’s built for real-world deployment, not toy examples.

- Datasets curated from real products and production environments.

- Understands project structure through AST indexing and retrieval.

- Optimized for context-aware edits and scalable workflows.

- Evaluated against industry benchmarks and enterprise use cases.
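The AST-indexing bullet above can be illustrated with a minimal sketch that uses Python's standard `ast` module. It records every function and class definition in a set of files, then ranks those symbols against a query. The `Symbol` type, `index_source`, and `retrieve` are hypothetical names. The keyword-overlap scoring is a deliberately simple stand-in: a production system would more likely use embeddings or a search index. Nothing here describes BirdMind's actual implementation.

```python
import ast
from dataclasses import dataclass


@dataclass(frozen=True)
class Symbol:
    """One indexed definition (hypothetical structure)."""
    name: str
    kind: str  # "function" or "class"
    path: str
    lineno: int
    doc: str


def index_source(path: str, source: str) -> list[Symbol]:
    """Parse one file and collect its function and class definitions."""
    tree = ast.parse(source, filename=path)
    symbols: list[Symbol] = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            kind = "function"
        elif isinstance(node, ast.ClassDef):
            kind = "class"
        else:
            continue
        symbols.append(Symbol(node.name, kind, path, node.lineno, ast.get_docstring(node) or ""))
    return symbols


def retrieve(index: list[Symbol], query: str, k: int = 3) -> list[Symbol]:
    """Rank symbols by how many query words appear in their name or docstring."""
    terms = set(query.lower().split())

    def score(sym: Symbol) -> int:
        text = f"{sym.name} {sym.doc}".lower()
        return sum(term in text for term in terms)

    ranked = sorted(index, key=score, reverse=True)
    return [sym for sym in ranked[:k] if score(sym) > 0]


# Usage: index a tiny in-memory "repository" and query it.
files = {
    "auth.py": 'def login(user, password):\n    """Authenticate a user."""\n    return True\n\n'
               'class Session:\n    """Holds an authenticated session."""\n',
    "billing.py": 'def charge(card, amount):\n    """Charge a payment card."""\n    return amount\n',
}
index = [sym for path, src in files.items() for sym in index_source(path, src)]
hits = retrieve(index, "authenticate user login")
print([(s.path, s.name) for s in hits])  # prints [('auth.py', 'login'), ('auth.py', 'Session')]
```

Because each `Symbol` keeps its file path and line number, a retrieved hit can be traced back to its exact location when the system needs to make a targeted edit.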

Focused today. Expanding tomorrow.

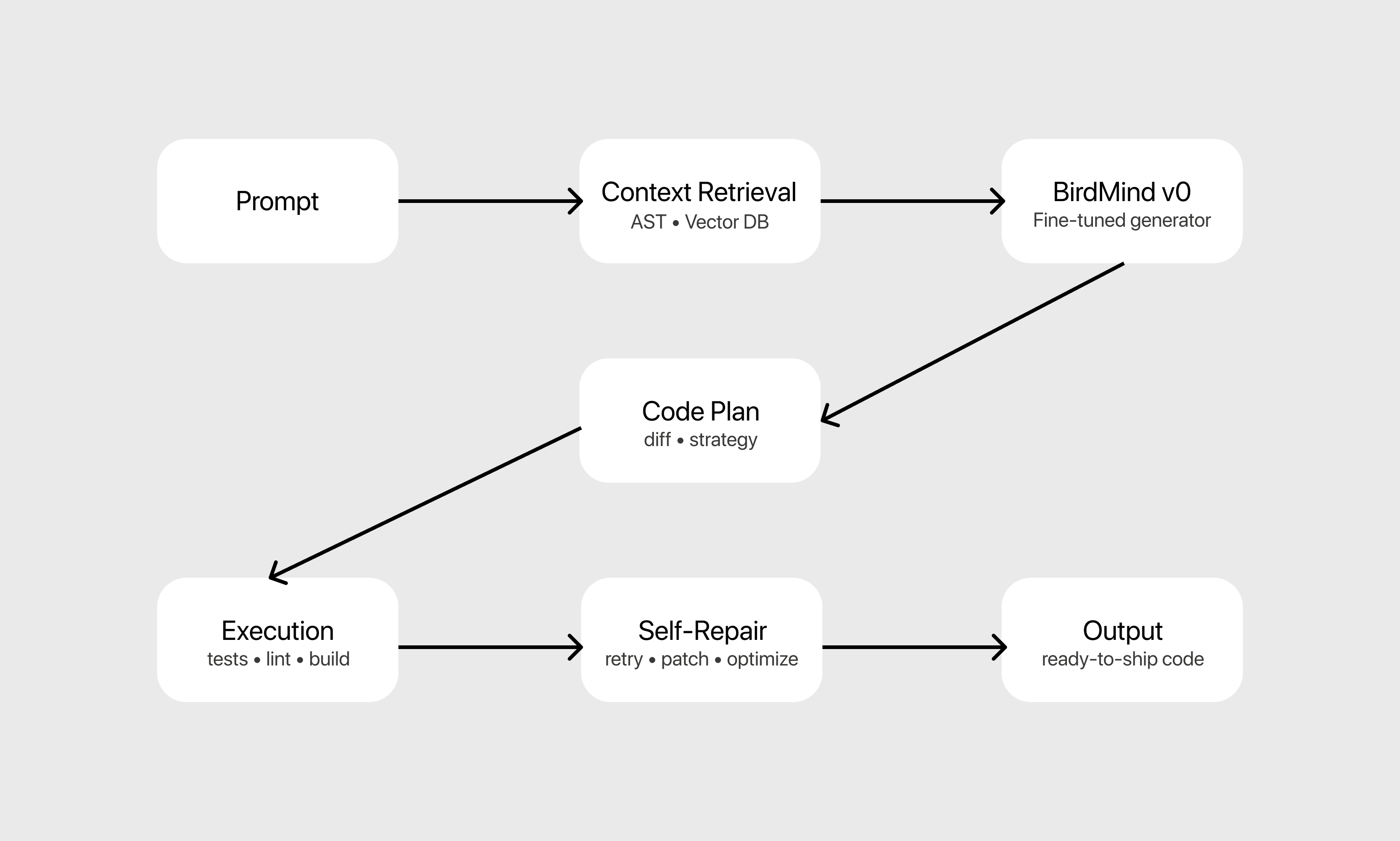

The system, at a glance.

BirdMind combines retrieval-augmented generation, a fine-tuned coding model, and an execution loop that validates and improves results before returning production-ready code. Each layer is purpose-built to reduce errors, handle complexity, and integrate seamlessly into enterprise workflows.
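The sketch below shows one way the retrieval-augmented step could pack retrieved snippets into a prompt under a context budget, such as the 32k-token window cited for the base model. It is minimal and rests on two assumptions. First, the snippets arrive already ranked best-first. Second, token counts are approximated by whitespace-separated words, which is only a rough proxy for a real tokenizer. `build_prompt` and its parameters are hypothetical.

```python
def approx_tokens(text: str) -> int:
    """Rough token estimate: whitespace-separated words (a real system would use the model's tokenizer)."""
    return len(text.split())


def build_prompt(task: str, snippets: list[str], budget_tokens: int = 32_000,
                 reserve_for_output: int = 4_000) -> str:
    """Pack ranked snippets into the prompt until the context budget is used up."""
    remaining = budget_tokens - reserve_for_output - approx_tokens(task)
    chosen: list[str] = []
    for snippet in snippets:  # assumed ranked best-first
        cost = approx_tokens(snippet)
        if cost > remaining:
            continue  # skip snippets that do not fit; a smaller later one still might
        chosen.append(snippet)
        remaining -= cost
    blocks = [f"# Context {i}\n{s}" for i, s in enumerate(chosen, start=1)]
    blocks.append(f"# Task\n{task}")
    return "\n\n".join(blocks)


prompt = build_prompt("add login", ["a b c", "d e f g h"], budget_tokens=10, reserve_for_output=2)
print(prompt)
```

Reserving part of the window for the model's output prevents the retrieved context from crowding out the generated code itself.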

Base model: Qwen2.5-Coder-14B, a state-of-the-art code model with strong performance on HumanEval, MBPP, and SWE-bench. It offers a 32k context window, a permissive license, and a balance of capability and efficiency.

Future roadmap: larger variants (32B) if compute allows, or lightweight fallbacks for faster inference.

Open foundations

- The Stack v2 — curated OSS corpus

- CodeSearchNet / MBPP+ — tasks & solutions

- HumanEval+ / EvalPlus — function synthesis

BirdMind datasets

- Production repositories with frameworks, databases, auth, and payments

- Commit diffs capturing issue → change → test

- Prompt ↔ code ↔ repair traces aligned with development workflows

Focus: not just algorithms, but how real software products are built and maintained.
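One way to picture the commit-diff data described above is as a record that links an issue to the change that fixed it and to the test that confirms the fix. The sketch below stores such records as JSON Lines. The `CommitExample` schema, every field name, and the sample values are hypothetical illustrations, not BirdMind's actual dataset format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class CommitExample:
    """Hypothetical issue -> change -> test training record."""
    repo: str
    issue: str             # natural-language description of the problem
    diff: str              # unified diff of the fixing commit
    test_command: str      # command that validates the change
    tests_passed_after: bool
    frameworks: list[str] = field(default_factory=list)


def to_jsonl(examples: list[CommitExample]) -> str:
    """Serialize one record per line (newlines inside fields are escaped by json.dumps)."""
    return "\n".join(json.dumps(asdict(e), sort_keys=True) for e in examples)


def from_jsonl(text: str) -> list[CommitExample]:
    """Parse records back, skipping blank lines."""
    return [CommitExample(**json.loads(line)) for line in text.splitlines() if line.strip()]


example = CommitExample(
    repo="acme/shop",
    issue="Checkout fails when the cart is empty",
    diff="--- a/cart.py\n+++ b/cart.py\n@@ -1 +1 @@\n-total = sum(items) / len(items)\n+total = sum(items)\n",
    test_command="pytest tests/test_cart.py",
    tests_passed_after=True,
    frameworks=["django", "stripe"],
)
restored = from_jsonl(to_jsonl([example]))
```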

Training that builds reliable code intelligence

BirdMind is trained to understand and generate real-world applications. The process is divided into three stages: supervised fine-tuning, alignment with developer preferences, and reinforcement guided by execution. Reward signals are derived from passing tests, successful compilation, and clean linting in a secure, isolated environment.
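The execution-derived reward can be sketched as a function that combines the three signals named in the paragraph above: compilation, passing tests, and clean linting. The gating rule (no reward when the code fails to compile) and the 0.8/0.2 weights are assumptions chosen for illustration. They are not BirdMind's published reward.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExecutionResult:
    """Outcome of running one candidate in the sandbox (hypothetical structure)."""
    compiled: bool
    tests_passed: int
    tests_total: int
    lint_errors: int


def execution_reward(r: ExecutionResult, w_tests: float = 0.8, w_lint: float = 0.2) -> float:
    """Scalar reward in [0, 1] built from compile, test, and lint signals."""
    if not r.compiled:
        return 0.0  # gate: a candidate that does not build earns nothing
    pass_rate = r.tests_passed / r.tests_total if r.tests_total else 0.0
    lint_clean = 1.0 if r.lint_errors == 0 else 0.0
    return w_tests * pass_rate + w_lint * lint_clean


print(execution_reward(ExecutionResult(compiled=True, tests_passed=2, tests_total=4, lint_errors=3)))  # prints 0.4
```

Using the test pass rate rather than a binary all-pass signal gives partial credit. That makes the reward denser, at the cost of rewarding code that is only partly correct.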

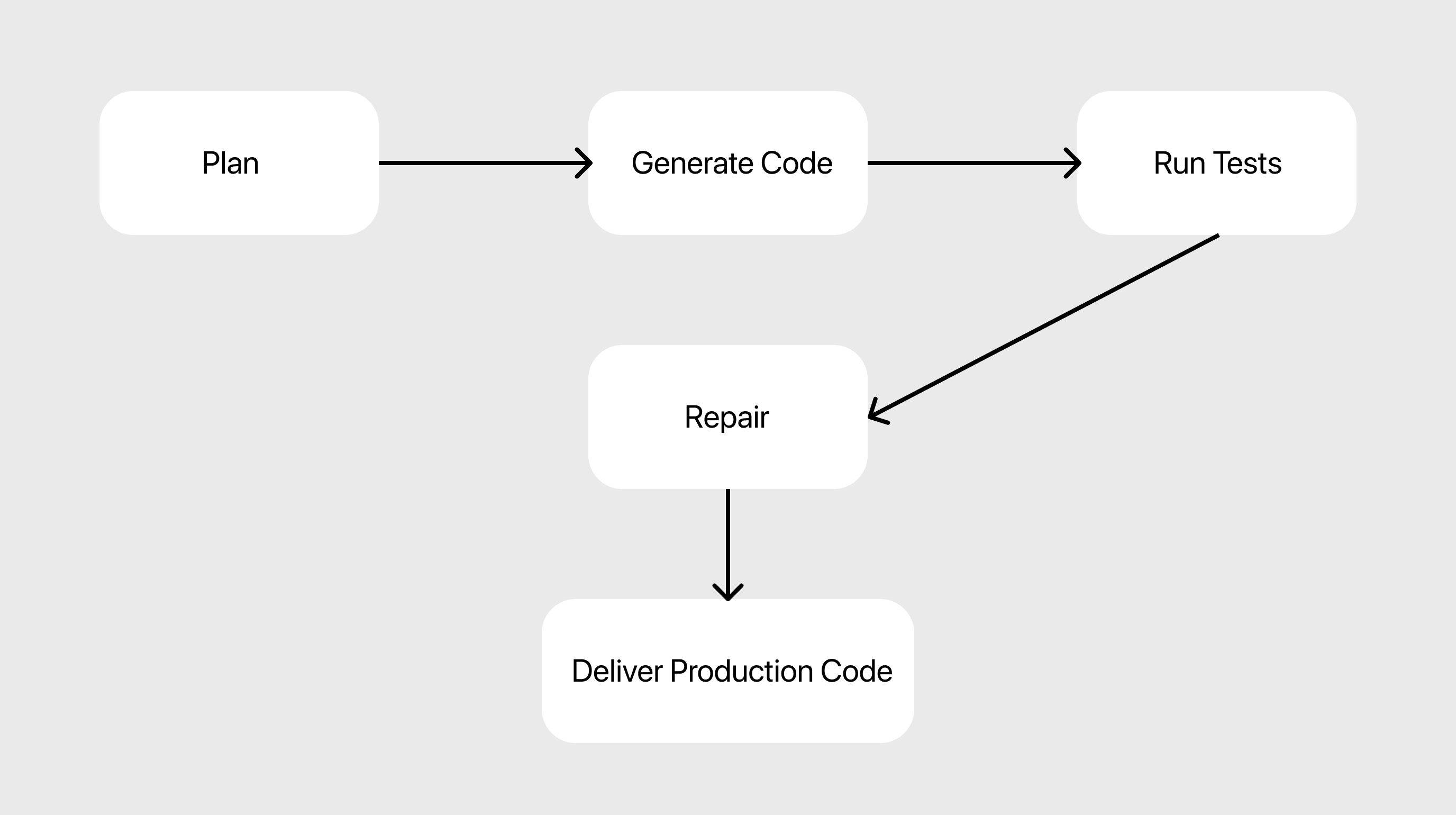

An agent that accelerates real-world development.

BirdMind operates like your most reliable teammate: it interprets your intent, plans the implementation, generates precise code, and verifies it through automated testing. Any issues are automatically detected and corrected, ensuring production-ready results every time.
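The generate, verify, and repair cycle described above can be sketched as a small loop. It produces a candidate, runs it together with its tests in a fresh Python process, and feeds any failure output into the next attempt. `generate` stands in for the model call and is a hypothetical callable. Intent interpretation and planning are omitted. The subprocess shown here is not a sandbox; in practice it would run inside the isolated environment described in the next section.

```python
import subprocess
import sys
from dataclasses import dataclass
from typing import Callable


@dataclass
class Attempt:
    code: str
    passed: bool
    feedback: str


def verify(code: str, tests: str, timeout_s: float = 10.0) -> tuple[bool, str]:
    """Run the candidate plus its tests in a separate Python process."""
    try:
        proc = subprocess.run(
            [sys.executable, "-c", code + "\n" + tests],
            capture_output=True, text=True, timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return False, f"timed out after {timeout_s}s"
    return proc.returncode == 0, proc.stderr


def agent_loop(task: str, tests: str, generate: Callable[[str, str], str],
               max_rounds: int = 3) -> list[Attempt]:
    """Generate, verify, and retry with the failure output as feedback."""
    history: list[Attempt] = []
    feedback = ""
    for _ in range(max_rounds):
        code = generate(task, feedback)
        passed, feedback = verify(code, tests)
        history.append(Attempt(code, passed, feedback))
        if passed:
            break
    return history


def fake_generate(task: str, feedback: str) -> str:
    """Stand-in for the model: buggy on the first try, fixed once it sees feedback."""
    if not feedback:
        return "def add(a, b):\n    return a - b"
    return "def add(a, b):\n    return a + b"


history = agent_loop("implement add", "assert add(2, 3) == 5", fake_generate)
print([a.passed for a in history])  # prints [False, True]
```

The `max_rounds` cap bounds the cost of a task that never converges. The loop then returns its full history rather than retrying indefinitely.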

Built for secure execution.

Every action BirdMind performs happens in a tightly controlled environment. Execution is fully isolated with Docker, network access is blocked, and only trusted packages from signed mirrors are allowed. Secrets are detected and blocked before entering the sandbox.
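To illustrate the isolation controls in the paragraph above, the sketch below assembles a `docker run` invocation. It disables networking, caps CPU, memory, and process count, and drops privileges. The flags are standard Docker CLI options. The image name `sandbox-python:latest`, the specific limits, and the use of the coreutils `timeout` command for the wall-clock cap are assumptions; `timeout` must exist in the image. This is not BirdMind's actual sandbox configuration, and the signed-mirror and secret-scanning controls are not shown.

```python
import shlex
from typing import Optional


def sandbox_command(image: str, command: list[str], host_dir: Optional[str] = None,
                    cpus: str = "1.0", memory: str = "512m", pids: int = 128,
                    time_limit_s: int = 60) -> list[str]:
    """Build a locked-down `docker run` argv for executing untrusted code."""
    argv = [
        "docker", "run", "--rm",
        "--network", "none",                  # no outbound network access
        "--cpus", cpus,                       # CPU cap
        "--memory", memory,                   # memory cap; exceeding it triggers the OOM killer
        "--pids-limit", str(pids),            # bound the number of processes
        "--read-only",                        # read-only root filesystem
        "--tmpfs", "/tmp",                    # writable scratch space only
        "--cap-drop", "ALL",                  # drop all Linux capabilities
        "--security-opt", "no-new-privileges",
        "--user", "65534:65534",              # run as an unprivileged uid/gid
    ]
    if host_dir is not None:
        argv += ["-v", f"{host_dir}:/workspace:ro", "-w", "/workspace"]
    argv.append(image)
    # Wall-clock cap enforced inside the container (assumes coreutils `timeout` is installed).
    argv += ["timeout", str(time_limit_s), *command]
    return argv


cmd = sandbox_command(
    "sandbox-python:latest",                               # hypothetical image name
    ["python", "-m", "pytest", "-q", "-p", "no:cacheprovider"],
    host_dir="/tmp/job-42",
)
print(shlex.join(cmd))
# To execute: subprocess.run(cmd, capture_output=True, text=True)
```

Building the argument list, rather than a shell string, avoids shell interpolation of untrusted paths or commands.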

- Network‑isolated sandbox (seccomp/AppArmor)

- Package allowlist from signed mirrors

- Automated secret scanning (TruffleHog / Gitleaks)

- Strict resource caps on CPU, memory, and time

- No outbound network access

- Install packages only from signed mirrors

- Run tests with pytest/vitest; lint with eslint/ruff; type-check with mypy

- Automatic kill-switch on resource cap breach

- Immutable logs for audit and compliance

OpenAI‑compatible API, ready to integrate.

BirdMind provides a drop‑in API compatible with OpenAI endpoints. You can integrate it with your existing SDKs and workflows without rewriting your client code.
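Because the API follows OpenAI's chat-completions shape, a client needs only a base URL, an API key, and a model name. The sketch below builds such a request with the Python standard library. The base URL and the `birdmind` model id are placeholders, not documented BirdMind values. With the official `openai` Python SDK, the equivalent is to pass `base_url` and `api_key` to the `OpenAI` client constructor.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str,
                       messages: list[dict], temperature: float = 0.2) -> urllib.request.Request:
    """Build a POST to the OpenAI-style /chat/completions endpoint."""
    body = {"model": model, "messages": messages, "temperature": temperature}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's message content."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]


req = build_chat_request(
    "https://api.birdmind.example/v1",   # placeholder base URL
    "YOUR_API_KEY",
    "birdmind",                          # placeholder model id
    [{"role": "user", "content": "Write a FastAPI endpoint that returns a health check."}],
)
# reply_text = send(req)  # performs the network call
```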